Part B: Exploratory Data Analysis (EDA)

Multi-Topic Text Classification with Various Deep Learning Models

Author: Murat Karakaya

Date created….. 17 09 2021

Date published… 13 03 2022

Last modified…. 12 03 2022

Description: This is the Part B of the tutorial series “Multi-Topic Text Classification with Various Deep Learning Models” that covers all the phases of text classification:

- Exploratory Data Analysis (EDA),

- Text preprocessing

- TF Data Pipeline

- Keras TextVectorization preprocessing layer

- Multi-class (multi-topic) text classification

- Deep Learning model design & end-to-end model implementation

- Performance evaluation & metrics

- Generating classification report

- Hyper-parameter tuning

- etc.

We will design various Deep Learning models by using

- the Keras Embedding layer,

- Convolutional (Conv1D) layer,

- Recurrent (LSTM) layer,

- Transformer Encoder block, and

- pre-trained transformer (BERT).

We will cover all the topics related to solving Multi-Class Text Classification problems with sample implementations in Python / TensorFlow / Keras environment.

We will use a Kaggle Dataset in which there are 32 topics and more than 400K total reviews.

If you would like to learn more about Deep Learning with practical coding examples,

- Please subscribe to the Murat Karakaya Akademi YouTube Channel or

- Do not forget to turn on notifications so that you will be notified when new parts are uploaded.

- Follow my blog on muratkarakaya.net

You can access all the codes, videos, and posts of this tutorial series from the links below.

PART B: EXPLORATORY DATA ANALYSIS (EDA)

In this tutorial, I will use a Multi-Class Classification Dataset for Turkish. It is a benchmark dataset for the Turkish text classification task.

It contains 430K comments (reviews or complaints) for a total of 32 categories (products or services).

Each category roughly has 13K comments.

A baseline algorithm, Naive Bayes, gets an 84% F1 score.

However, you can download and use any other multi-class text datasets as well.

Load Stop Words in Turkish

As you might know “Stop words” are a set of commonly used words in a language. Examples of stop words in English are “a”, “the”, “is”, “are” and etc. Stop words are commonly used in Text Mining and Natural Language Processing (NLP) to eliminate words that are so commonly used that they carry very little useful information.

I begin with uploading an existing list of stop words in Turkish but this list is not an exhaustive one. We will add new words to this list after analyzing the dataset.

You can download this file from here.

tr_stop_words = pd.read_csv('tr_stop_word.txt',header=None)

print("First 5 entries:")

for each in tr_stop_words.values[:5]:

print(each[0])First 5 entries:

ama

amma

anca

ancak

bu

time: 212 ms (started: 2022-03-01 12:14:57 +00:00)

Load the Dataset

data = pd.read_csv('ticaret-yorum.csv')

pd.set_option('max_colwidth', 400)time: 6.11 s (started: 2022-03-01 12:14:58 +00:00)

Some Samples of Reviews (Text) & Their Corresponding Topics (Class)

data.head()

time: 47 ms (started: 2022-03-01 12:15:04 +00:00)Explore the Basic Properties of the Dataset

The shape of the dataset

print("Shape of data (rows, cols)=>",data.shape)Shape of data (rows, cols)=> (431306, 2)

time: 2.86 ms (started: 2022-03-01 12:15:04 +00:00)

Check the Null Values

Get the initial information about the dataset:

data.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 431306 entries, 0 to 431305

Data columns (total 2 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 category 431306 non-null object

1 text 431306 non-null object

dtypes: object(2)

memory usage: 6.6+ MB

time: 208 ms (started: 2022-03-01 12:15:04 +00:00)

According to data.info() there is no null values in the dataset but let's verify it:

data.isnull().sum()category 0

text 0

dtype: int64

time: 113 ms (started: 2022-03-01 12:15:04 +00:00)

Notice that according to the above numbers there are no null values in the dataset!

If there are any null values in the dataset, we could drop these null values as follows:

df.dropna(inplace=True)

df.isnull().sum()

Check the Duplicated Reviews

Let’s first find if there are any duplicated records

data.describe(include='all')

time: 1.25 s (started: 2022-03-01 12:15:04 +00:00)For category column there are 431306 rows and 32 unique values. However, for text column, there exists 431306 rows of which 427231 entries are unique. That is, for text column, there are some duplications: 431306 - 427231 = 4075

We can verify the duplications:

data.text.duplicated(keep="first").value_counts()False 427231

True 4075

Name: text, dtype: int64

time: 120 ms (started: 2022-03-01 12:15:05 +00:00)

Drop the duplicated reviews:

data.drop_duplicates(subset="text",keep="first",inplace=True,ignore_index=True)

data.describe()

time: 2.93 s (started: 2022-03-01 12:15:06 +00:00)Analyze the Classes (Topics)

Topic List

topic_list = data.category.unique()

print("Topics:\n", topic_list)Topics:

['alisveris' 'anne-bebek' 'beyaz-esya' 'bilgisayar' 'cep-telefon-kategori'

'egitim' 'elektronik' 'emlak-ve-insaat' 'enerji'

'etkinlik-ve-organizasyon' 'finans' 'gida' 'giyim' 'hizmet-sektoru'

'icecek' 'internet' 'kamu-hizmetleri' 'kargo-nakliyat'

'kisisel-bakim-ve-kozmetik' 'kucuk-ev-aletleri' 'medya'

'mekan-ve-eglence' 'mobilya-ev-tekstili' 'mucevher-saat-gozluk'

'mutfak-arac-gerec' 'otomotiv' 'saglik' 'sigortacilik' 'spor' 'temizlik'

'turizm' 'ulasim']

time: 52.4 ms (started: 2022-03-01 12:15:09 +00:00)

Number of Topics

number_of_topics = len(topic_list)

print("Number of Topics: ",number_of_topics)Number of Topics: 32

time: 4.66 ms (started: 2022-03-01 12:15:09 +00:00)

Number of Reviews per Topic

The number of reviews in each category:

data.category.value_counts()kamu-hizmetleri 13998

cep-telefon-kategori 13975

enerji 13968

finans 13958

ulasim 13943

medya 13908

kargo-nakliyat 13877

mutfak-arac-gerec 13867

alisveris 13816

mekan-ve-eglence 13807

elektronik 13770

beyaz-esya 13761

kucuk-ev-aletleri 13732

giyim 13676

internet 13657

icecek 13564

saglik 13559

sigortacilik 13486

spor 13448

mobilya-ev-tekstili 13434

otomotiv 13377

turizm 13317

egitim 13264

gida 13150

temizlik 13111

mucevher-saat-gozluk 12964

bilgisayar 12963

kisisel-bakim-ve-kozmetik 12657

anne-bebek 12381

emlak-ve-insaat 12024

hizmet-sektoru 11463

etkinlik-ve-organizasyon 11356

Name: category, dtype: int64

time: 57.4 ms (started: 2022-03-01 12:15:09 +00:00)

Let’s depict the number of reviews per topic as a bar chart:

data.category.value_counts().plot.bar(x="Topics",y="Number of Reviews",figsize=(32,6) )<matplotlib.axes._subplots.AxesSubplot at 0x7f40bce1d510>

time: 1.36 s (started: 2022-03-01 12:15:09 +00:00)As you see in the above numbers, we can argue that the dataset is a balanced one: the number of samples is evenly distributed over the topics.

Analyze Reviews (Text)

Some Review Samples

data.head(5)

time: 21.5 ms (started: 2022-03-01 12:15:10 +00:00)Calculate the number of words in each review

data['words'] = [len(x.split()) for x in data['text'].tolist()]time: 4.7 s (started: 2022-03-01 12:15:10 +00:00)data[['words','text']].head()

time: 52.6 ms (started: 2022-03-01 12:15:15 +00:00)Notice that most of the reviews end with “Devamını oku” (Read next). We will remove this repeated expression below!

Review Length in terms of Number of Words

data['words'].describe()count 427231.000000

mean 44.408624

std 8.108499

min 2.000000

25% 42.000000

50% 46.000000

75% 49.000000

max 183.000000

Name: words, dtype: float64

time: 32.8 ms (started: 2022-03-01 12:15:15 +00:00)

Note that:

- 75% of the reviews have less than 50 words.

- The longest review has 183 words.

We could (will) use these statistics

- to filter out some of the reviews,

- to determine the maximum review size

- etc.

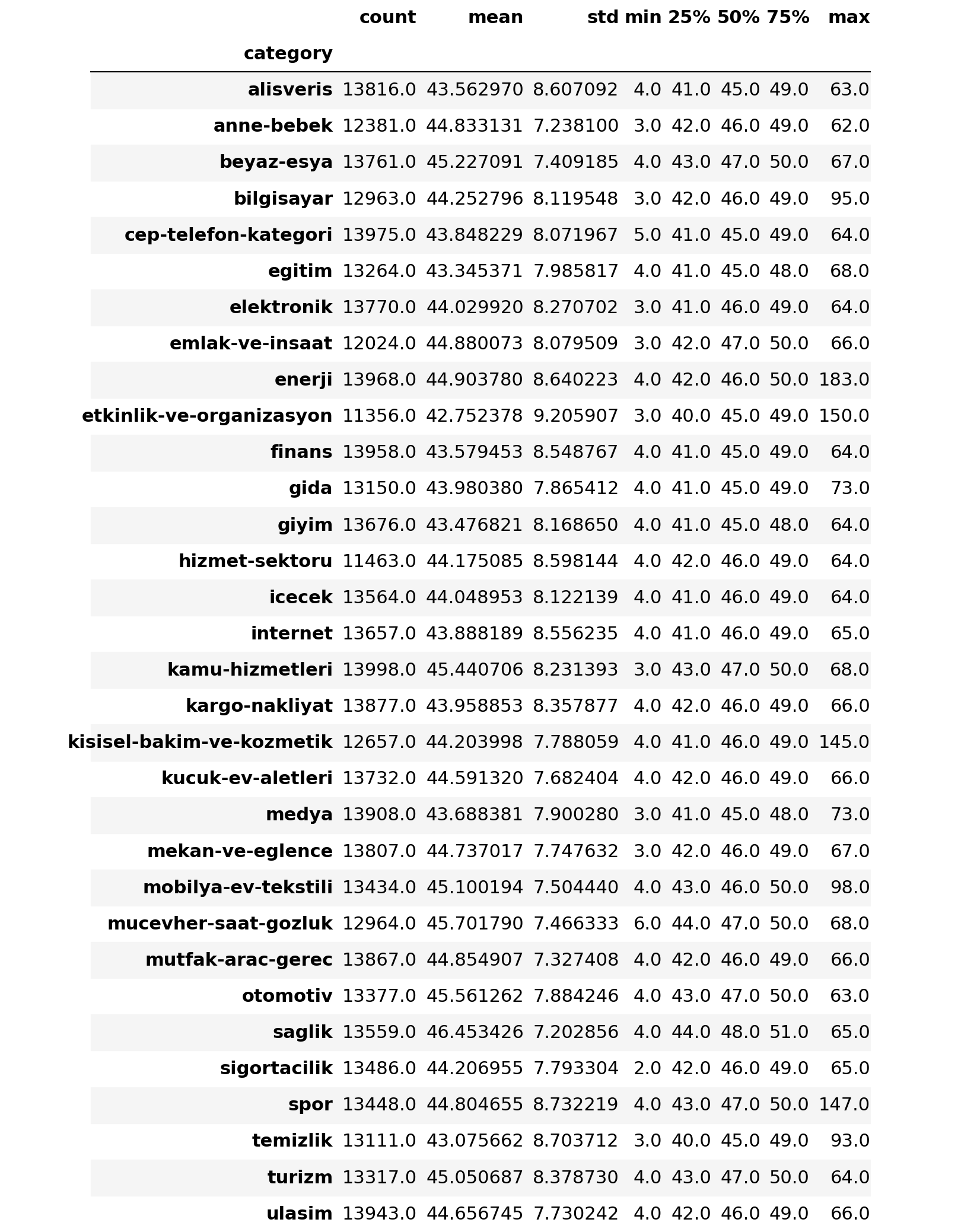

Review Length in terms of Number of Words per Topic

data.groupby(['category'])['words'].describe()

time: 379 ms (started: 2022-03-01 12:15:15 +00:00)We observe that

- Only 9 out of 32 topics have longer reviews than 70 words.

- For all the topics, 75% of the reviews have less than 50 words.

That is, we can limit the reviews to 50 or 70 words.

Number of short reviews

We can check the number of short reviews by comparing a threshold:

min_review_size = 15

data[data['words']<min_review_size].count()category 4950

text 4950

words 4950

dtype: int64

time: 64.3 ms (started: 2022-03-01 12:15:15 +00:00)

Let’s see some short review samples:

data[data['words']<min_review_size]

time: 84.9 ms (started: 2022-03-01 12:15:15 +00:00)Decide minimum and maximum review size

Important: In some tasks, we can assume that short reviews can not convey necessary /enough information for training and testing an ML model. For example, in Text Generation, if you would like to generate longer text, you would prefer to train your model with longer text examples.

As a result, according to the ML task at hand, you can use the above statistics, to set up a minimum and maximum review size in terms of words.

Here, I set these parameters as follows:

min_review_size = 15

max_review_size = 40 #50time: 1.47 ms (started: 2022-03-01 12:15:16 +00:00)

Filter out the short reviews

In the initial raw data, after removing duplications, we have 427231 reviews.

data.count()category 427231

text 427231

words 427231

dtype: int64

time: 189 ms (started: 2022-03-01 12:15:16 +00:00)

Above, we observed that we have 4950 reviews whose length is less than min_review_size (15 words).

Let’s remove short reviews:

data= data[data['words']>=min_review_size]time: 49.9 ms (started: 2022-03-01 12:15:16 +00:00)

After filtering out these short reviews, we will end up with (427231–4950) 422281 as below:

data.count()category 422281

text 422281

words 422281

dtype: int64

time: 311 ms (started: 2022-03-01 12:15:16 +00:00)

Finally, verify that there is no review whose length is less than min_review_size

data[data['words']<min_review_size].count()category 0

text 0

words 0

dtype: int64

time: 31.9 ms (started: 2022-03-01 12:15:16 +00:00)

Trim the longer reviews

We will trim the longer reviews using Keras TextVectorization layer below.

Analyze the Vocabulary

Count the distinct words

Let’s look into the raw dataset to count the distinct words

vocab = set()

corpus= [x.split() for x in data['text'].tolist()]

for sentence in corpus:

for word in sentence:

vocab.add(word.lower())

print("Number of distinct words in raw data: ", len(vocab))Number of distinct words in raw data: 900327

time: 25.8 s (started: 2022-03-01 12:15:16 +00:00)

Note: This number is huge actually. If you investigate some vocabulary entries, you would see that there are several reasons for this big number:

list(vocab)[:25]['kırılmıştı)',

'yıkıyor,"alalı',

'bulamama,"w118949152',

'+1lt',

'1.30₺',

"oylat'taki",

'sistemse?',

'jeansten',

'i***r*...devamını',

'yokmuş,"11',

'hama',

'yollanmaması,"28',

'çıkarıcısıdır,',

'güncellemediniz.',

'duyarsızlığı,metroport',

'hırıltısı',

'mahvolurdu',

'resetle',

'ödeyerek!devamını',

'raporunda,2010',

'i̇ndirdi,can',

'kredi',

'etkinleştirdim',

'yaşayacağımız',

'azarlanacaklarını']

time: 156 ms (started: 2022-03-01 12:15:42 +00:00)

- There are some typos in the reviews so the same word is misspelled and counted as a new word several times such as “haksızlık” vs “hakszlık”.

- Some reviewers use the Turkish alphabet some use the English alphabet to write the same words such as “özgün” vs “ozgun”

- There are many stop words in Turkish :) We will apply several workarounds to remove such words in the text during preprocessing below.

NOTE that:

- we are just analyzing the data, we are not preprocessing it yet!

- we will use a much better method to split the text into tokens in preprocessing phase later.

- thus, here, we just aim to get familiar with the data at hand before starting to preprocess it.

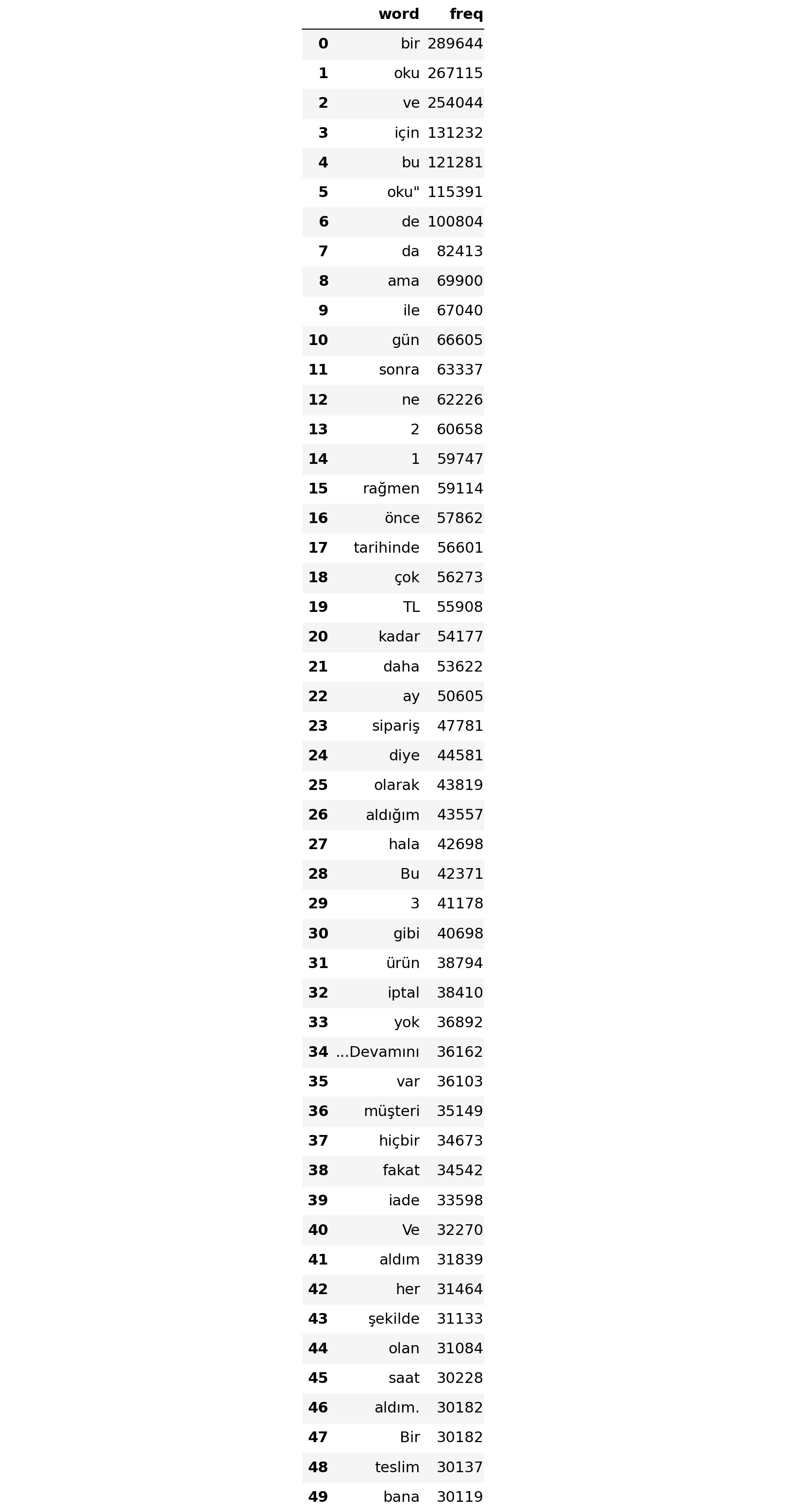

Count the frequency of words in the raw dataset

word_freq= data.text.str.split(expand=True).stack().value_counts()

word_freq=word_freq.reset_index(name='freq').rename(columns={'index': 'word'})time: 30.4 s (started: 2022-03-01 12:15:42 +00:00)

Let’s check the top 50 words:

top_50_frequent_words = word_freq[:50]

top_50_frequent_words

time: 15.6 ms (started: 2022-03-01 12:16:13 +00:00)If you investigate the above top-50 frequent words carefully, you can notice that some words can be considered “stop words”, some others could be very informative for a classifier.

Let’s see which top-50 frequent words are in the stop-word list that we loaded at the beginning:

for each in top_50_frequent_words['word']:

if each in tr_stop_words.values:

print (each)bir

ve

için

bu

de

da

ama

ile

sonra

rağmen

önce

çok

kadar

daha

diye

gibi

yok

fakat

bana

time: 8.67 ms (started: 2022-03-01 12:16:13 +00:00)

Almost 20 words out of the top-50 words are stop words. Therefore, during preprocessing, we will take care of the stop-words.

Summary

In this part, we have explored the dataset and taken several actions and decisions:

- we removed the duplications and null values (if any)

- we observed that there are 32 topics and reviews are evenly distributed over these topics

- we decided the minimum and maximum review lengths

- we dropped the short reviews

In the next part, we will apply the text preprocessing by using the Keras TextVectorization layer.

Do you have any questions or comments? Please share them in the comment section.

Thank you for your attention!